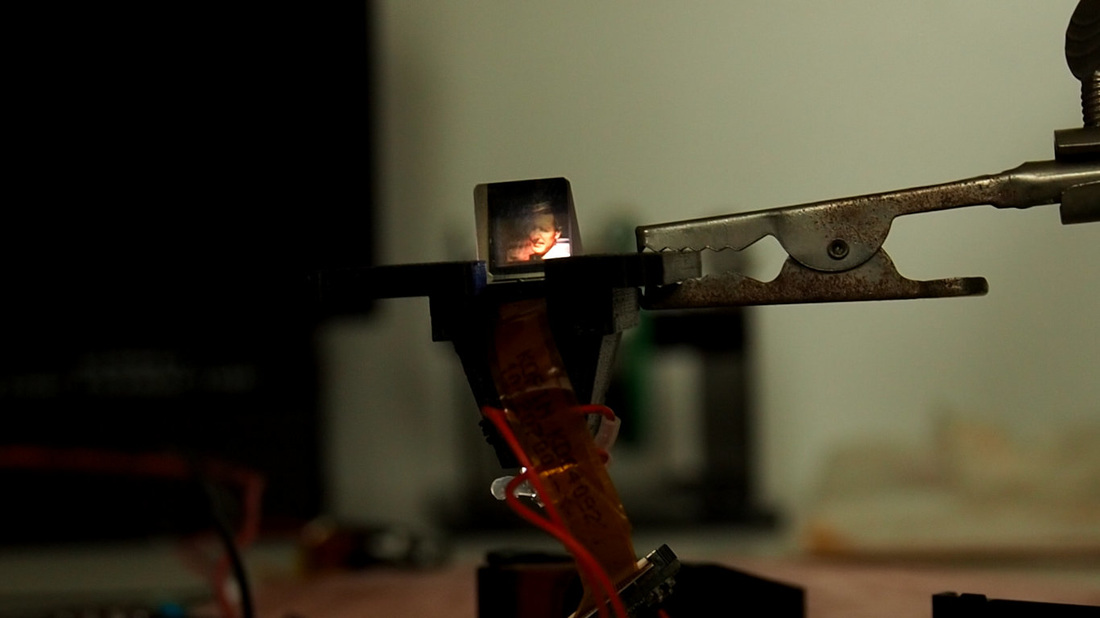

We spent two days experimenting with inventor Simon de Bakker of Commonplace to make a working prototype of a handheld viewfinder/video player that layers a moving image over reality. Inside there is a tiny LCD screen, a prism and a lens, so that you can hold it close to your eye. The idea is that, if you keep both eyes open, the recorded image will merge with your view of the real environment.

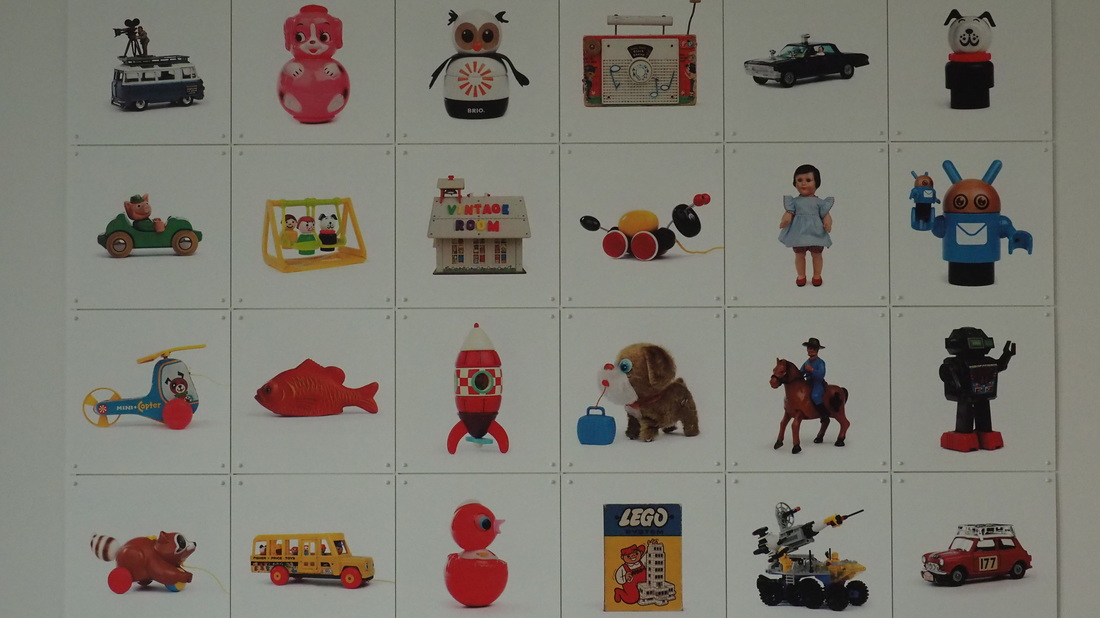

The first problem we encountered was that the lens distorted the scale of the image, so that even when it was shot at the same zoom as our eyes see, it didn't match up with the view of the real environment. One solution was to shoot the image from further away from the subject to compensate for the distortion, so we experimented with distances and used a picture of a grid in Simon's living room to line the image up against.
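As a rough illustration of the distance compensation, here is a minimal sketch. It assumes the lens simply enlarges the image by a single factor, and that apparent size falls off in proportion to distance, which is only approximately true for a flat subject seen head-on. The function name and the example numbers are hypothetical; we found our own distances by trial and error against the grid, not by calculation.

```python
def compensated_distance(viewing_distance_m, magnification):
    """Estimate how far back to shoot so the enlarged image matches the real view.

    Assumption: the lens enlarges the image by `magnification`, and apparent
    size is inversely proportional to distance (small-angle approximation).
    """
    if viewing_distance_m <= 0 or magnification <= 0:
        raise ValueError("distance and magnification must be positive")
    # Shooting from further away shrinks the subject by the same factor
    # that the lens later enlarges it.
    return viewing_distance_m * magnification


# Hypothetical numbers: viewer stands 2 m from the subject, lens enlarges 1.5x.
print(compensated_distance(2.0, 1.5))  # 3.0
```

Moving the camera back also changes the perspective slightly, so an estimate like this would only be a starting point for lining things up by eye.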

It worked. By the end of the second day we had a working prototype and knew how to make image sequences to show on it. It also has a scrolling mechanism, like Commonplace's Bioscope.

We discovered that our brains are good at deciding which eye to relay information from, and at the moment the recorded image appears very faint. We might benefit from a larger, brighter LCD screen that takes up a higher proportion of our field of vision. For now the LCD image is still too ghostly to relay clear point-of-view navigational information. We need to talk to someone who knows about optics and how our brain interprets the visual input from our eyes.
