
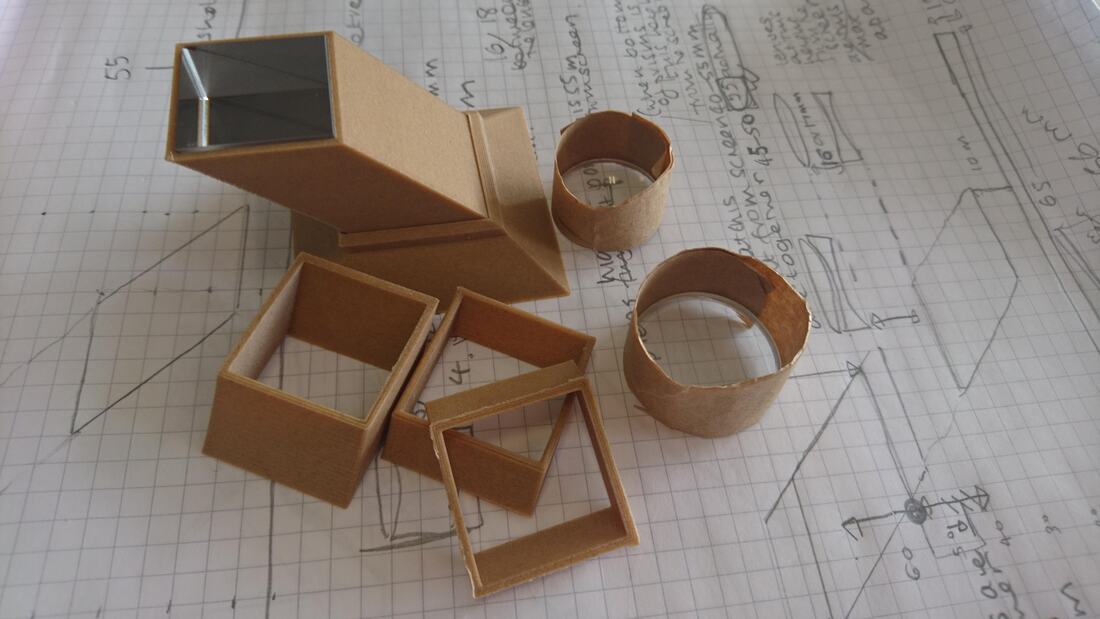

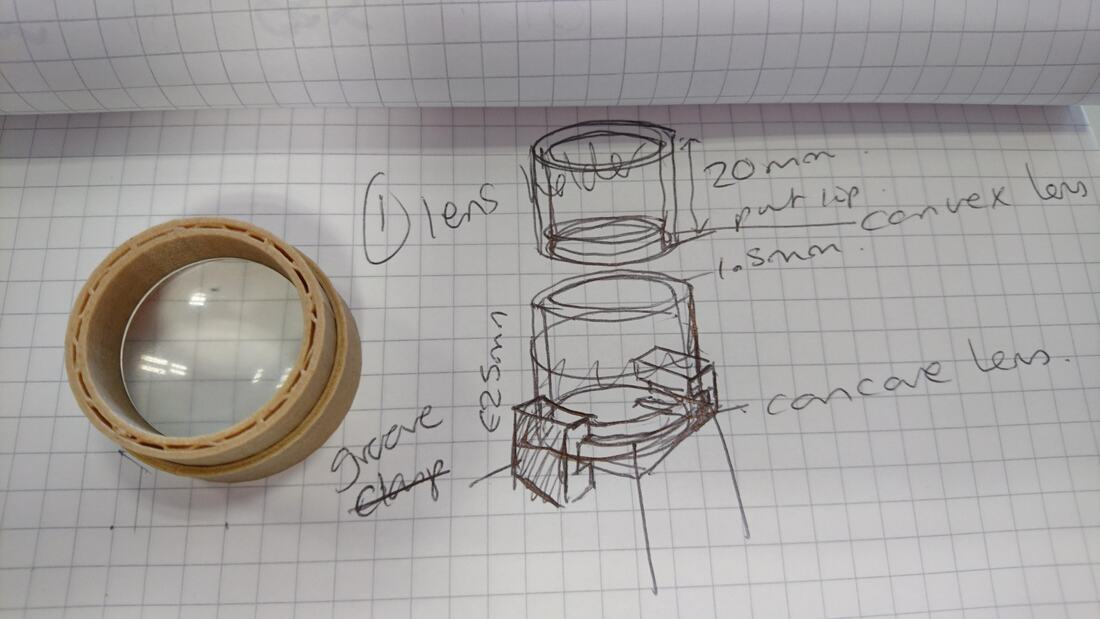

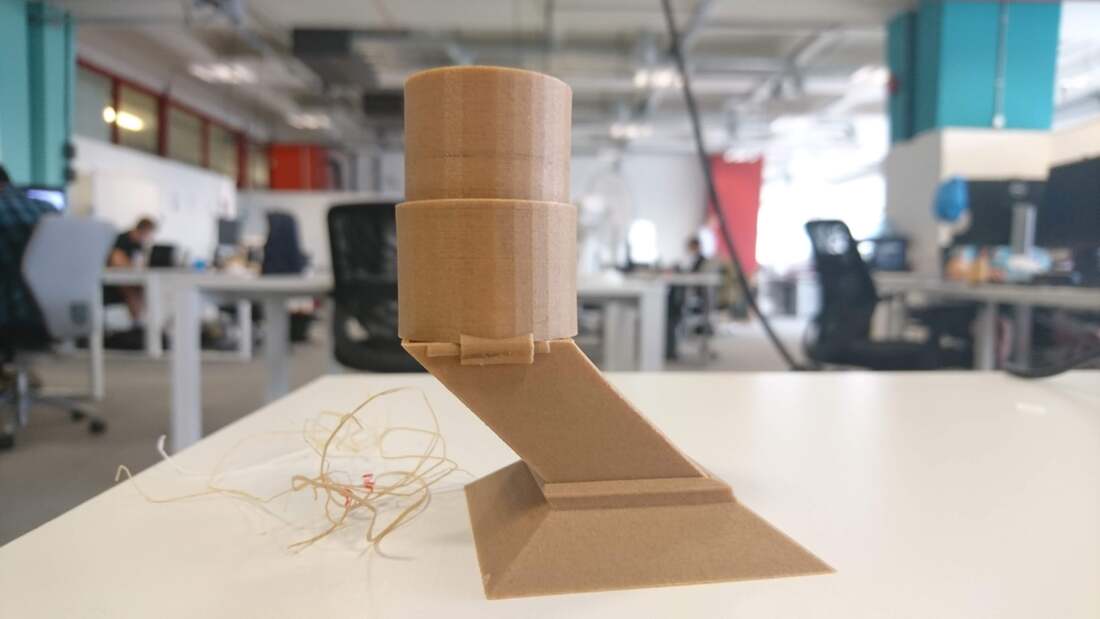

A project about friendship and exploring our local area under lockdown. Being limited to places I can reach on foot has meant finding new paths and places in a familiar local landscape. The project was inspired by the realisation that I had visited the same tree as a close friend on the same day, but at different times. This is a participatory project about being together while staying apart, by leaving our presence for our friends to find.

How to participate:

- Choose up to three good friends you have missed seeing during lockdown. These are the people you will share your video with.
- Film yourself in an outdoor place. The footage should be:
  - around 30 seconds long
  - action rather than talking
  - shot in landscape rather than portrait, and wide (but not fisheye) so we can see your surroundings
  - shot with the camera as still as you can (use a tripod or a handy tree, or ask a family member to film it).
- You can stage the action or just film whatever happens. Play around. Be natural. Keep it short, or keep the camera running and edit afterwards.
- Find a simple way of registering your presence in that place. It could be as simple as walking along a path or climbing a tree.
- Using WhatsApp, share the video with your friends and send them the location.
- Your friend/s can answer with a video of themselves in the same place, or find a new location to film themselves.

If the participants agree, the material generated may be collated into a located video documentary about the area under lockdown.

Info about me is at www.rachelhenson.com

Then I worked with Maf’j, using Blender, to design and make 3D-printed prism and lens holders that slotted together, along with bases of different heights, in order to find the optimum lens and prism configuration. I did basic Blender tutorials, and then during our sessions Maf’j helped me learn how to alter the sizes of the 3D models as a practical learning exercise. We concluded that it is hard to alter measurements easily and accurately in Blender.
It would be better to use something like SolidWorks, a design software tool used by engineers. Body Rocket, a start-up making sensors for bicycles, has offered the use of their engineer and software for a short time once I have finalised the design and measurements.

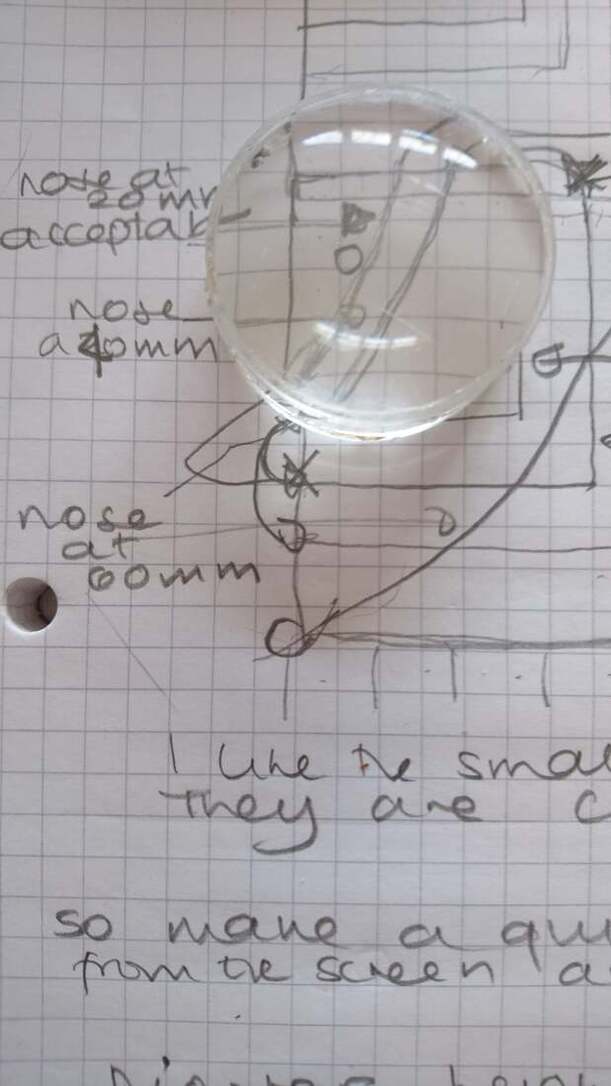

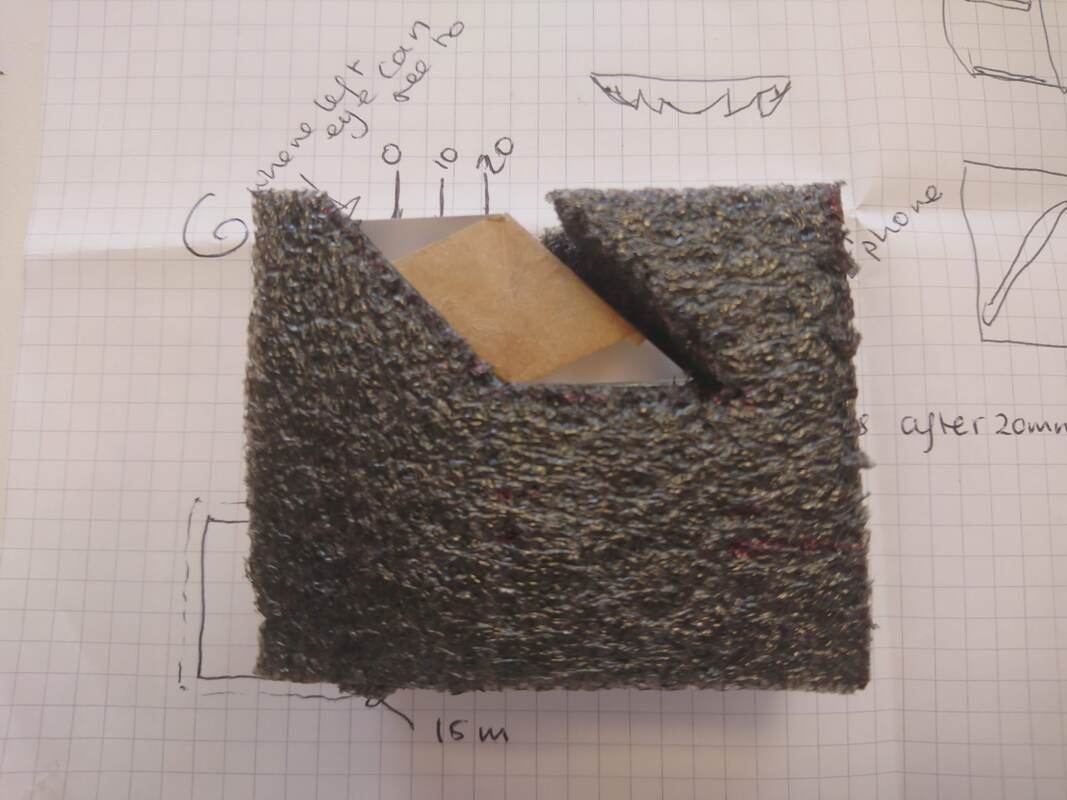

Maf’j found it difficult to merge the live and recorded views using the crude mirrorless Quizzer. This made me aware that I have trained my eyes and brain to be fast at binocular merging and to ignore large physical objects up close, becoming an expert without realising it, and it prompted me to work on improvements to the Quizzer itself. I had noticed that when the Quizzer is used without the mirror prism, so that the phone’s camera looks forward, the body of the phone gets in the way of the binocular overlap. I decided to redesign the Quizzer to optimise the overlap. Ideas I had included: finding lenses that focus nearer to the screen, so the other eye can see around the phone more easily; putting another prism onto the phone camera so that it effectively looks forward while the body of the phone is at 90 degrees to the user’s eye; and finally, using two mirror prisms to reflect the screen like a periscope, so that the viewer can look above or out to the side of the phone. Having settled on the periscope idea, I sourced some larger prisms so I could reflect more of the screen for a larger field of view. I found it difficult to hold the prisms and lenses together while finding the focal distance, testing the width of the overlap and working out how far the lens should be from the screen, so I made a holder out of foam.
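Finding the focal distance with the foam holder comes down to the thin-lens relationship between a lens's focal length, its distance from the screen and where the eye sees the image. Below is a minimal sketch using the Gaussian thin-lens equation (real-is-positive convention). The focal lengths and distances are hypothetical example values, not measurements of the Quizzer's lenses, and real lenses and prisms will deviate from this idealisation.

```python
import math


def image_distance(focal_mm: float, screen_mm: float) -> float:
    """Gaussian thin-lens equation 1/f = 1/u + 1/v, solved for v.

    focal_mm:  focal length f of a converging lens (positive)
    screen_mm: distance u from the lens to the phone screen (the object)
    Returns v; a negative value is a virtual image on the screen's side of the
    lens, which is what a magnifier-style viewer relies on.
    """
    if math.isclose(screen_mm, focal_mm):
        return math.inf  # screen at the focal point: image at infinity
    return 1.0 / (1.0 / focal_mm - 1.0 / screen_mm)


def magnification(focal_mm: float, screen_mm: float) -> float:
    """Lateral magnification m = -v/u; a positive value means the image is upright."""
    v = image_distance(focal_mm, screen_mm)
    if math.isinf(v):
        raise ValueError("screen at the focal point: magnification is undefined")
    return -v / screen_mm


if __name__ == "__main__":
    focal = 45.0  # hypothetical focal length in mm
    for screen in (30.0, 40.0, 44.0):
        v = image_distance(focal, screen)
        print(f"screen {screen:.0f} mm from lens -> virtual image {-v:.0f} mm away, "
              f"magnified x{magnification(focal, screen):.1f}")
```

With the screen just inside the focal length, the lens forms an enlarged, upright virtual image, and the image distance grows very quickly as the screen approaches the focal point, which may be one reason the lens-to-screen distance is so fiddly to set by hand. A lens with a shorter focal length produces a similar image with the screen held closer.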

I was invited to show work at Encounter Bow, in association with Chisenhale Dance Space, so I spent a day there to come up with an idea and a site. I did not end up being able to make work for it, but the visit made me think practically about what I could film live in public and where I could set up. Ideas included filming the Parkour performance in advance, then setting up a viewing station so that people could view the demo superimposed on the site after it had finished, or view previously recorded action over the live action. Another idea was to set up a walking portrait booth in an alleyway, where people would be invited to be filmed walking towards and away from the camera and then watch themselves and others superimposed on the site, with some elements where the live and the recorded were staged to interact in interesting ways.

I wanted to test an idea where the audience views a live performance merged, through the Quizzer, with previously recorded footage of that performance. I had noticed how interesting it is when the viewer is not sure whether the action is happening live or not. This pleasurable confusion seems to happen when the live performer and the performer in the video appear to start and finish actions in the same place. It would be interesting to design an experience around this premise. How about randomly mixing in footage recorded just moments before? This would involve setting up a viewing station in an outdoor location, trained on some kind of durational action or natural animation of the scene. The app would record the action and play back recently recorded sequences. I tested this idea by sitting in a wood in the wind, recording footage of branches moving in strong gusts and then playing the footage back randomly using an existing app that shuffle-plays video. This was very effective, and I want to develop it for an audience. Maf’j and I carried out two experiments to test this idea further.
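The "recently recorded footage" idea can be sketched as a rolling buffer: keep only the clips recorded in the last few minutes and, whenever one finishes playing, pick another at random. This is a minimal, hypothetical sketch; the `Clip` record, the three-minute default window and the `RecentFootageShuffler` name are illustrative assumptions, not how the existing shuffle-play app works or how a finished app would have to be built.

```python
from __future__ import annotations

import random
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Clip:
    """A short recorded sequence; times are seconds since the session started."""
    name: str
    start_s: float
    duration_s: float

    @property
    def end_s(self) -> float:
        return self.start_s + self.duration_s


class RecentFootageShuffler:
    """Keep only clips from the last `window_s` seconds and replay one at random."""

    def __init__(self, window_s: float = 180.0, seed: Optional[int] = None):
        self.window_s = window_s
        self.clips: deque[Clip] = deque()
        self.rng = random.Random(seed)

    def add(self, clip: Clip) -> None:
        """Store a newly recorded clip and forget anything older than the window."""
        self.clips.append(clip)
        self._evict(now_s=clip.end_s)

    def _evict(self, now_s: float) -> None:
        # Assumes clips are added in recording order, so the oldest is on the left.
        while self.clips and self.clips[0].end_s < now_s - self.window_s:
            self.clips.popleft()

    def next_clip(self, now_s: float) -> Optional[Clip]:
        """Pick a random recent clip to play next, or None if nothing is recent enough."""
        self._evict(now_s)
        return self.rng.choice(self.clips) if self.clips else None
```

A short window keeps the replayed action close to what is happening live, which might help sustain the uncertainty about what is live; a longer window trades that for more variety.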
We filmed each other playing her root bean game on a large piece of paper on a table. The repeated action of throwing, placing and drawing intricate lines was interesting when the real and recorded figures overlapped. The viewer forgot which was live and which was recorded, even though the video recorded on the phone was poor quality. We also tested the idea in the outdoor corridor, filming each other taking different routes between different points on the floor as we walked towards the camera. The best way to take this idea forward would be to craft a piece where the movements of a live performer are closely choreographed with those of a virtual (prerecorded) performer.

March 2019

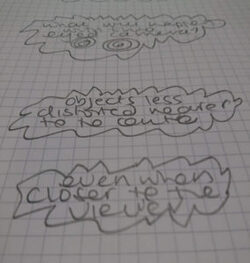

I met with Dr Keisuke Suzuki and Dr David Schwarzman, Postdoctoral Research Fellows at the Sackler Centre for Consciousness Science at Sussex University. They were involved in a VR experiment in which a 360 film of the environment is presented to the viewer interspersed with a live 360 camera feed, as a way of testing which changes people notice, how accurately they notice them and how small a change they can detect. Demoing the Quizzer to them, we discussed how it is easier to match a video with a live scene viewed through a camera than with the real world, because the lens sees the world differently from the eye. (How differently is one of the things I have been trying to find out.) David suggested I think about using a cross to help users line up the video and the real world, something the optometrists I have spoken to have also suggested. In fact, I usually tell the viewer which aspect of the scene to line up, and it always contains horizontal and vertical lines: in the Fusebox corridor, it is the square window in the door. Perhaps this aspect could first appear accented without the background scene, using markerless AR to help the user find the right viewing position. Or maybe it is better simply to highlight the physical world; I could do this in a subtle way using paste-up graffiti. I learned the basics of DaVinci Resolve, compositing natural light-on-water effects footage onto an image of the Fusebox corridor and viewing them through the Quizzer. This worked really well. I tried out the Artivive app, using an image of the corridor as a markerless target to trigger the AR content. This was easy to learn and worked pretty well, but needs a strong internet connection. I then invited Alex Peckham to work on an app that triggers content layered over the camera feed according to compass direction. He came up with a rough prototype and also instructions on how I could change the content.
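One simple way to trigger content by compass direction is to give each piece of content a range of headings and choose the nearest range the phone is currently facing. The sketch below is hypothetical: it is not Alex Peckham's Unity prototype, and the `HeadingZone` type, zone names and widths are made up for illustration. The main thing it shows is handling the wrap-around at north, where 350° and 10° are only 20° apart. Real phone compass readings are noisy, so a working app would probably also need to smooth the heading.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class HeadingZone:
    """Content shown when the phone faces within +/- half_width_deg of centre_deg."""
    content: str
    centre_deg: float
    half_width_deg: float


def angular_difference(a_deg: float, b_deg: float) -> float:
    """Smallest absolute difference between two compass bearings, in [0, 180]."""
    d = (a_deg - b_deg) % 360.0
    return min(d, 360.0 - d)


def content_for_heading(heading_deg: float, zones: list[HeadingZone]) -> Optional[str]:
    """Return the content of the nearest zone containing the heading, or None."""
    inside = [z for z in zones
              if angular_difference(heading_deg, z.centre_deg) <= z.half_width_deg]
    if not inside:
        return None
    return min(inside, key=lambda z: angular_difference(heading_deg, z.centre_deg)).content


if __name__ == "__main__":
    zones = [HeadingZone("sea", 180.0, 30.0), HeadingZone("north", 0.0, 20.0)]
    for heading in (350.0, 200.0, 90.0):
        print(heading, content_for_heading(heading, zones))
```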
This was another opportunity to wrestle with Unity and learn about creating and uploading Android apps. I recced three possible routes and made mock-ups of filters using footage of light on water. I developed a way of testing the filters in situ using the simple Quizzer, which was also useful for demonstrating how I wanted the filters to appear. I wanted to try making a filter over the camera feed showing how we think robins 'see' the curve of the Earth’s magnetic field, which is a different shape depending on the direction you are facing. To test the idea I looked for AR compass apps. The best ones exist only on iPhones, but iPhones displayed the camera feed at the wrong scale to match up with the real world through the current version of the Quizzer. Peter from VR Craftworks suggested I try Instagram filters. They worked really well through the Quizzer on my Sony phone. Revelation! I have been trying to test this for ages and thought it meant full-on app making, but no. The filter effect is perceived over the whole view, not just the view through the eye looking through the Quizzer. I noticed how people in the real world appear clearly but with a halo of the filter effects around them, my brain deciding what is most important for me to see. The ones that work best are particle effects, anchored shadowy objects and ones that warp the camera feed itself. This was very encouraging, and I decided to experiment with my own content and learn how to make filters. The only problem is that the mirrorless Quizzer reduces the binocular overlap by around 20 degrees, because the phone gets in the way when the camera faces forward. Possible ways of solving this new problem: find a lens that allows us to hold the phone closer to our eye, add an extra mirror, make a periscope, or use a separate tiny monitor. Following up my initial tests with Sara and the forest club, I shot some more footage out and about in Hollingdean.
I chose the old skatepark, because I love it, and the huge meadow that leads to the dew pond. My kids provided the action. It was still horrendously difficult to line up, especially in the meadow, and I struggled to define the exact nature of the problem. There were still too many variables: keeping the Quizzer still enough to stay matched up in x, y and z orientation, finding the right viewing position and scaling the image. I had to zoom in a lot to approximate the right scale, and this distorted the image, which affected the match-up. I decided to try some high-res footage Nick Driftwood had made using six Panasonic Lumix cameras by the West Pier and bandstand on the seafront. Neil made buttons to fix the x, y and z axes. This made it easier to make the initial match, but it was still tricky to match up the position and scale of near and far objects in relation to each other. Why was this? I had thought that taking a picture with a wide-angle lens distorts the perspective, but I found out from photographers’ forums that this is not the case. If you take a very wide photo and a very zoomed-in photo from the same position, objects will appear in exactly the same positions relative to each other. You can prove this by blowing up the wide photo to the same field of view as the zoomed-in one and comparing the two. However, the nearer an object is in the foreground, the more accurate you have to be with the viewing position. A slight shift of position up, down or side to side will change the position of the foreground object in relation to the background. So viewing position is key. Very wide lenses used in 360 photography do distort the image, but not in the way I thought. More research led me to tilt-shift lenses, which correct perspective by physically tilting or shifting the lens.
If you take a photo of a tall building with the camera tilted upwards, the image will show it narrowing towards the top. This is something our brains correct for, which means that to the naked eye the narrowing effect is not in evidence. A tilt-shift lens will make the image look more like how we see it. Maybe this was the key? I took Neil down to the bandstand to test Nick’s 360 footage. He agreed with me about the variables and suggested a test we could do. We would place crosses at different distances, take photos from different positions and view them through the mirrorless Quizzer to see where the distortion happened. To my surprise, even a photo of a very near object lined up perfectly with the background, in both position and scale, as long as we viewed it from exactly the position it was taken from; it was just much harder to find that precise viewing position. Of course, from a user-experience point of view it is probably better to make sequences without very near objects. Michael Danks from 4ground Media looked into the event room and suggested we try a 360 image taken with his “two eyed” camera, which takes very high-quality photos from left-eye and right-eye viewpoints. The next task is to test them in the Quizzer.
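These findings can be checked with an idealised pinhole camera model. The sketch below uses hypothetical example points in metres and ignores lens distortion, so it does not model the fisheye projections used in 360 cameras. It shows three things: zooming in from the same position scales every image point by the same factor; a small shift in viewpoint moves a near object much more than a far one; and tilting the camera upwards makes the parallel edges of a building converge, whereas keeping the image plane vertical, as a shift lens does, keeps them parallel. In this model a lens shift is just an offset of the image window, so it cannot introduce convergence.

```python
import math


def project(point, focal, camera=(0.0, 0.0, 0.0), pitch_deg=0.0):
    """Ideal pinhole projection of a 3D point onto the image plane.

    point, camera: (x right, y up, z forward) in metres.
    focal: focal length in image units; a wider lens has a smaller focal length.
    pitch_deg: how far the camera is tilted upwards.
    """
    x, y, z = (p - c for p, c in zip(point, camera))
    t = math.radians(pitch_deg)
    # Express the point in the tilted camera's frame (rotation about the x axis).
    y_cam = y * math.cos(t) - z * math.sin(t)
    z_cam = y * math.sin(t) + z * math.cos(t)
    return (focal * x / z_cam, focal * y_cam / z_cam)


def edge_width(pitch_deg, height_m):
    """Image-space distance between a building's edges (x = -5 m and +5 m, 20 m away)."""
    left = project((-5.0, height_m, 20.0), 1.0, pitch_deg=pitch_deg)[0]
    right = project((5.0, height_m, 20.0), 1.0, pitch_deg=pitch_deg)[0]
    return right - left


near, far = (0.3, -0.2, 1.0), (4.0, 2.0, 50.0)

# 1. Same viewpoint, wide vs zoomed-in lens: every coordinate scales by the same
#    factor, so enlarging the wide shot reproduces the zoomed-in one.
wide = [project(p, focal=1.0) for p in (near, far)]
tele = [project(p, focal=3.0) for p in (near, far)]
scale_factors = [t[i] / w[i] for w, t in zip(wide, tele) for i in (0, 1)]
print("scale factors:", [round(s, 6) for s in scale_factors])

# 2. Shift the viewpoint 5 cm sideways: the near object moves much further in the image.
moved = (0.05, 0.0, 0.0)
shift_near = project(near, 1.0)[0] - project(near, 1.0, camera=moved)[0]
shift_far = project(far, 1.0)[0] - project(far, 1.0, camera=moved)[0]
print(f"near object shifts {shift_near:.4f}, far object shifts {shift_far:.4f}")

# 3. A 30 m tall building: with a vertical image plane its edges stay parallel;
#    tilt the camera up 20 degrees and the top appears narrower than the base.
for pitch in (0.0, 20.0):
    print(f"pitch {pitch:>4}: base width {edge_width(pitch, 0.0):.3f}, "
          f"top width {edge_width(pitch, 30.0):.3f}")
```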
